Is ChatGPT safe? A cybersecurity guide

As use of generative AI and other innovative tools like ChatGPT becomes more widespread, it's critical to understand how safe they are. In this article, we’ll explore the built-in safety features of ChatGPT, how it uses data, and ChatGPT scams and other threats to be aware of. And get Norton 360 Deluxe to strengthen your defenses and help protect your digital life in the ever-evolving online landscape.

Is ChatGPT safe to use?

While there are ChatGPT privacy concerns and examples of ChatGPT malware scams, the game-changing chatbot has many built-in guardrails and is seen as generally safe to use.

However, as with any online tool, especially new ones, it’s important to practice good digital hygiene and stay informed about potential privacy threats as well as the ways that the tool can potentially be misused.

With its ability to write human-like content, ChatGPT has quickly become popular among students, professionals, and casual users. But with all the hype surrounding it, and early stories about GPT’s tendency to hallucinate, concerns around ChatGPT safety are warranted.

Here we’ll unpack the key things you need to know about ChatGPT safety, including examples of what OpenAI, the company behind ChatGPT, does to protect users. We’ll also explore ChatGPT malware and other security risks, and discuss key tips for how to use ChatGPT safely.

ChatGPT security measures meant to protect you

ChatGPT has a set of robust measures aimed at ensuring your privacy and security as you interact with the AI. Below are some key examples:

- Annual security audits: ChatGPT undergoes an annual security audit carried out by independent cybersecurity specialists, who attempt to identify potential vulnerabilities to ensure ChatGPT’s security measures are sound.

- Encryption: All data transferred between you and ChatGPT is encrypted. That means the data is scrambled into a type of code that can be unscrambled only after it reaches the intended recipient (you or the AI).

- Strict access controls: Strict access control means only authorized individuals can reach sensitive areas of ChatGPT’s inner workings or code base.

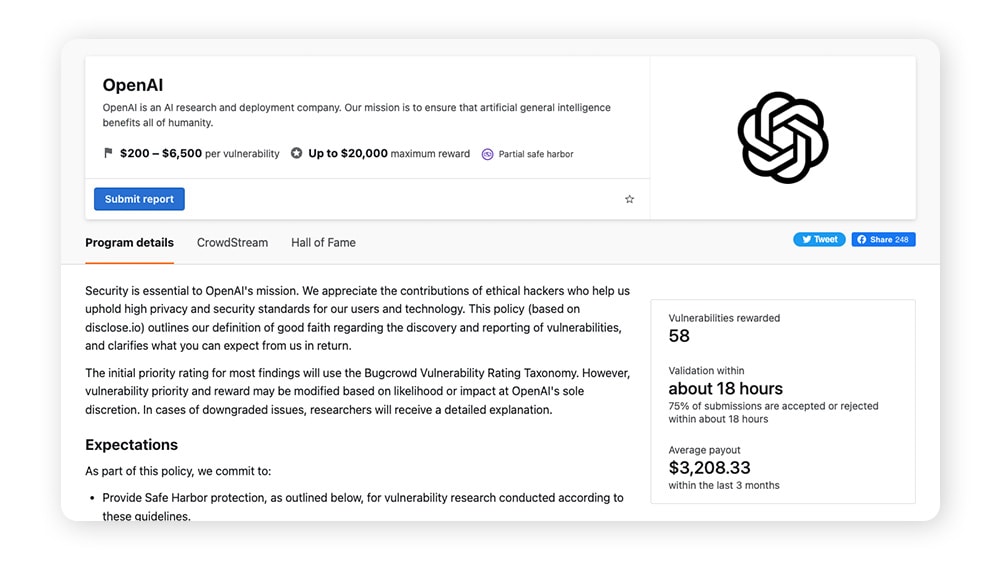

- Bug Bounty Program: ChatGPT’s Bug Bounty Program encourages ethical hackers, security researchers, and tech geeks to hunt for and report any potential security weaknesses or “bugs.”
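To make the encryption point above more concrete, here is a minimal sketch of what “scrambling” and “unscrambling” data means. It is a toy example built on a simple XOR cipher with a random one-time key, not the protocol ChatGPT actually uses (web traffic is normally protected by TLS in your browser). The `xor_bytes` helper and the sample message are assumptions made up for illustration.

```python
import secrets


def xor_bytes(data: bytes, key: bytes) -> bytes:
    """XOR each byte of data with the matching byte of key (toy cipher)."""
    if len(data) != len(key):
        raise ValueError("key must be exactly as long as the data")
    return bytes(d ^ k for d, k in zip(data, key))


# The sender and the recipient share a random secret key.
message = "What is the capital of France?".encode("utf-8")
key = secrets.token_bytes(len(message))

# This scrambled form is what would travel over the network.
ciphertext = xor_bytes(message, key)

# Only someone holding the same key can unscramble it.
recovered = xor_bytes(ciphertext, key).decode("utf-8")
print(recovered)
```

Anyone who intercepts `ciphertext` without the key sees only random-looking bytes. Real-world encryption uses far more sophisticated algorithms and key exchange, but the basic idea is the same.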

ChatGPT data collection practices to be aware of

Being aware of how a website, application, or chatbot uses your personal data is an important step in protecting yourself from sensitive data exposure.

Here are the key areas to know about OpenAI’s collection and use of your data:

- Third-party sharing: Though OpenAI shares content with, as it describes, “a select group of trusted service providers,” it claims not to sell or share user data with third parties like data brokers, who would use it for marketing or other commercial purposes.

- Sharing with vendors and service providers: OpenAI shares some user data with third-party vendors and service providers in the context of maintaining and improving its product.

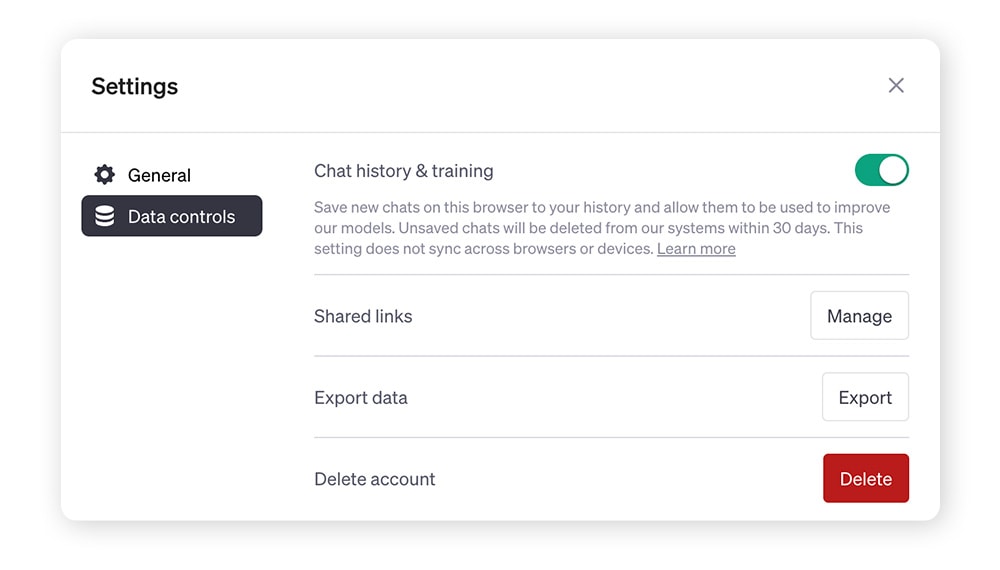

- Improving natural language processing ability: Unless you opt out of this feature by turning off your chat history, all conversations you have with ChatGPT are stored and used to further train and improve the AI model.

- Data storage and retention: OpenAI “de-identifies” your data to make it anonymous, and stores it securely, in compliance with regional data protection regulations such as the European Union’s GDPR.

ChatGPT scams and risks to avoid

While it has lots of safety measures in place, ChatGPT is not without risk. Learn more about some of the key ChatGPT security risks and scams below.

Data breaches

A data breach is when sensitive or private data is exposed without authorization — it could then be accessed and used to benefit a cybercriminal. For example, if personal data you shared in a conversation with ChatGPT is compromised, it could put you at risk of identity theft.

- Real-life example: In April 2023, a Samsung employee accidentally shared top-secret source code with the AI, after which the company banned the use of popular generative AI tools.

Even accidental data exposure can lead to serious consequences. It's essential to have a proactive approach to safeguarding your personal information online.

Phishing

Phishing is a set of manipulative tactics cybercriminals use to trick people into giving away sensitive information like passwords or credit card details. Certain types of phishing like email phishing or clone phishing involve scammers impersonating a trusted source such as your bank or employer.

One weakness of phishing tactics is the presence of telltale signs like poor spelling or grammar. But ChatGPT can now be used by scammers to craft highly realistic phishing emails — in many languages — that can easily deceive people.

- Real-life example: In March 2023, the European Union Agency for Law Enforcement Cooperation (Europol) issued a statement warning about the threat of AI-generated phishing, stating that ChatGPT’s “ability to reproduce language patterns to impersonate the style of speech of specific individuals or groups…could be used by criminals to target victims.”

Malware development

Malware is malicious software cybercriminals use to gain access to and damage a computer system or network. All types of malware require computer code, meaning hackers generally have to know a programming language to create new malware.

Scammers can now use ChatGPT to write or at least “improve” malware code for them. Although ChatGPT has guardrails in place to prevent such things from happening, there have been cases of users managing to bypass those restrictions.

- Real-life example: In April 2023, a security researcher reported having found a loophole that allowed him to create sophisticated malware using ChatGPT. The technology he created disguises itself as a screensaver app, and it can steal sensitive data from a Windows computer. It’s also disturbingly difficult to detect — in a test, only five out of 69 detection tools caught it.

Catfishing

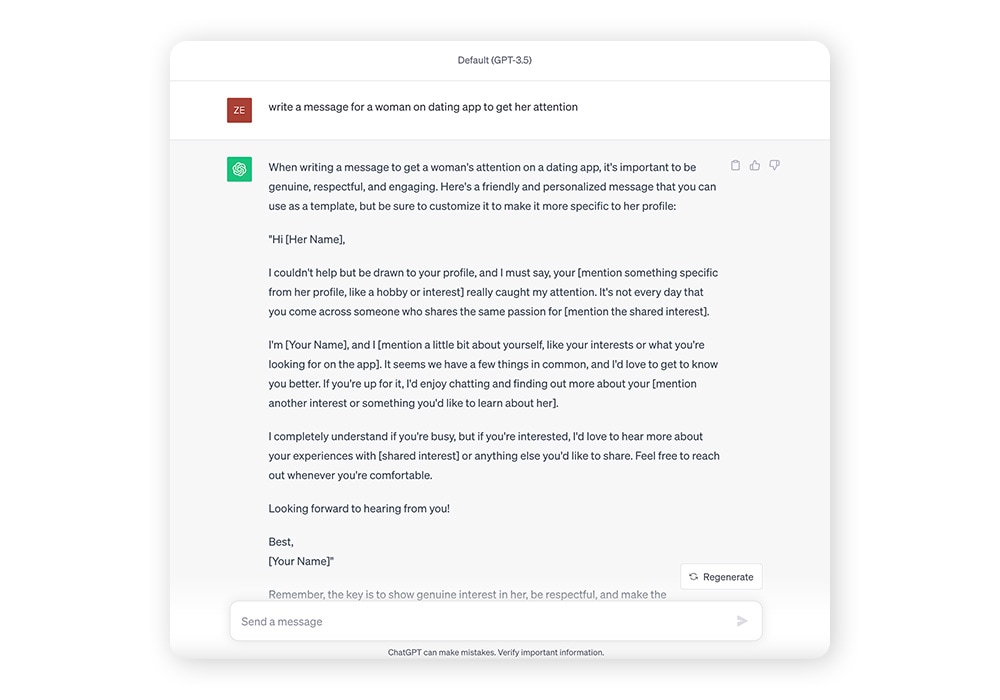

Catfishing is a deceptive practice of creating a false online identity to trick others for malicious purposes like scams or identity theft. Like most social engineering tactics, catfishing requires excellent impersonation skills. But hackers could use ChatGPT to create more realistic conversations or even to impersonate specific people.

- Real-life example: While it’s not always deceitful, people on dating apps like Tinder and Bumble have been using ChatGPT to write messages. This shows how quickly we’re adopting the technology in daily life and how hard it’s becoming to tell if a message is from a human or an AI.

Misinformation

Some of the risks associated with using ChatGPT don’t even need to be deliberate or malicious to be harmful.

ChatGPT’s strength is its ability to imitate the way humans write and use language. While the large language model is trained on vast amounts of data and can answer many complex questions accurately (it earned a B grade in a university-level business course!), it has been known to make serious errors and generate false content — a phenomenon called “hallucination.” Whenever you use ChatGPT or any other generative AI, it’s crucial to fact-check the information it outputs, as it can often make stuff up and be very convincing.

- Real-life example: A lawyer used ChatGPT to prepare a court filing in a personal injury case against an airline. Among other errors, ChatGPT cited six non-existing court decisions in the document.

Whaling

Whaling is a cyber attack that targets a high-profile individual like a business executive or senior official within an organization, usually with the aim of stealing sensitive information or committing financial fraud.

While businesses can protect themselves from many attacks by using cybersecurity best practices, whaling often exploits human error rather than software weaknesses. A hacker could potentially use ChatGPT to create realistic emails that can bypass security filters and be used in a whaling attack.

- Real-life example: In July 2023, news of a malicious ChatGPT clone called WormGPT came out. Cybercriminals appear to be using WormGPT to launch Business Email Compromise (BEC) attacks, which involve hackers posing as company executives.

Fake ChatGPT apps

Cybercriminals are tricking users into downloading fake ChatGPT apps. Some of these fake ChatGPT apps are “fleeceware,” used to extract as much money as possible from users by charging high subscription fees for services that barely function. Because fleeceware apps don’t contain overtly malicious code, they may be sold on Google Play and the Apple App Store without being detected.

Other ChatGPT app scams are more actively malicious. For instance, a hacker could send a phishing email inviting you to try ChatGPT. In reality, it is a scam that takes you to a malicious website or installs ransomware on your device.

- Real-life example: In April 2023, it was reported that hundreds of fake ChatGPT clones were being sold on Google’s Play Store.
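One way to guard against links to fake ChatGPT sites is to check where a link really points before you click it. The sketch below shows the idea using Python’s standard `urllib.parse` module. The `TRUSTED_DOMAINS` list and the `is_trusted_link` function are assumptions for illustration only; confirm the current official domains with OpenAI before relying on any such list.

```python
from urllib.parse import urlsplit

# Assumption: an illustrative allowlist, not an official or complete one.
TRUSTED_DOMAINS = {"openai.com", "chatgpt.com"}


def is_trusted_link(url: str) -> bool:
    """Return True only for HTTPS links whose host is a trusted domain
    or a subdomain of one."""
    parts = urlsplit(url)
    if parts.scheme != "https":
        return False
    host = (parts.hostname or "").lower().rstrip(".")
    return any(host == d or host.endswith("." + d) for d in TRUSTED_DOMAINS)


print(is_trusted_link("https://chat.openai.com/"))              # genuine subdomain
print(is_trusted_link("https://openai.com.example.net/login"))  # lookalike host
print(is_trusted_link("https://openai.com@evil.example/"))      # host is evil.example
```

The check compares the full hostname rather than searching for “openai” anywhere in the link, because scammers often place a trusted name at the start of a lookalike address.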

6 tips to protect yourself while using ChatGPT

Despite ChatGPT’s security measures, as with any online tool, there are risks. Here are some key safety tips and best practices for staying safe while using ChatGPT:

- Avoid sharing sensitive information. Remember to keep personal details private and never disclose financial or other confidential information during conversations with ChatGPT.

- Review privacy policies. Read OpenAI’s privacy policy to understand how your data is handled and what level of control you have over it.

- Use an anonymous account. Consider using an anonymous account to interact with ChatGPT for an added digital privacy barrier. Note that you may have to provide and verify a phone number to sign up.

- Use strong password security. Follow good password security practices by creating strong, unique passwords and changing them periodically to keep your account secure.

- Stay educated. Stay updated about the latest artificial intelligence security issues and related online scams. Knowledge is power when it comes to cybersecurity.

- Use antivirus software. Good antivirus software like Norton 360 Deluxe can help safeguard your device from potential cyber threats. Get Norton 360 Deluxe now and start protecting your device and your data.
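As an illustration of the first tip, the sketch below strips a few obvious kinds of personal data from a piece of text before it is pasted into a chatbot. The patterns and the `redact` function are assumptions made up for this example. They are deliberately rough, will miss many formats, and are no substitute for reading over what you are about to share.

```python
import re

# Rough, illustrative patterns (assumptions): real data comes in many more formats.
PATTERNS = [
    ("[EMAIL]", re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")),
    # 13 to 19 digits, optionally separated by spaces or hyphens.
    ("[CARD]", re.compile(r"\b\d(?:[ -]?\d){12,18}\b")),
    # Loose phone-number shape; applied last so it cannot swallow card numbers.
    ("[PHONE]", re.compile(r"\+?\d[\d\s().-]{7,}\d")),
]


def redact(text: str) -> str:
    """Replace anything that looks like an email, card, or phone number."""
    for placeholder, pattern in PATTERNS:
        text = pattern.sub(placeholder, text)
    return text


prompt = (
    "Email me at jane.doe@example.com or call +1 415-555-0100. "
    "Card: 4111 1111 1111 1111."
)
print(redact(prompt))
# Email me at [EMAIL] or call [PHONE]. Card: [CARD].
```

A filter like this catches only what it has a pattern for, so treat it as a safety net rather than a guarantee.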

Using artificial intelligence safely

While OpenAI ensures that ChatGPT is built with safety and privacy at its core, it’s important for users to be proactive about their online safety.

Norton 360 Deluxe is built on top of powerful, heuristic anti-malware technology to help guard against new and emerging malware threats. Plus, it features a built-in VPN to encrypt the information you send and receive online and help protect your personal data. Those benefits are paramount to help mitigate risks associated with chatbot scams, such as data breaches or malware attacks.

Help protect your digital life today with Norton 360 Deluxe.

FAQs about ChatGPT safety

Is ChatGPT safe to download?

If you’re being prompted to download ChatGPT to your desktop, it may be a scam. The standard version of ChatGPT is not downloadable software; it’s a cloud-based web application. However, there are safe Apple and Android apps available to install on your mobile device, or you can install the ChatGPT browser extension.

Is it safe to give ChatGPT your phone number?

While OpenAI may ask for your phone number when you first make a ChatGPT account, that is different from sharing your phone number while chatting with the AI. The information you share when signing up is private and secure, but anything you share in a chat may not be.

Is ChatGPT free to use?

Yes, ChatGPT offers a free version, currently powered by the GPT-3.5 model. There’s also a paid version available, powered by the newer GPT-4 model.

Why did ChatGPT get banned?

Some countries, including North Korea, China, and Russia, restrict the use of ChatGPT due to privacy or political concerns. Though Italy banned its use in early 2023, access has since been restored. Certain companies have also banned its use for similar reasons.

Is ChatGPT confidential?

No. Unless you disable chat history, everything you type into ChatGPT could potentially be used to further train the AI. Even if you have chat history disabled, OpenAI retains the information for 30 days before deleting it. Remember to never share sensitive or personal information with the chatbot.

How can you delete your chats on ChatGPT?

You can delete individual chats by clicking the trash icon next to each saved topic. You can also delete your entire chat history at once by clicking the three dots next to your account name, choosing Settings, then clicking Clear next to Clear all chats.

Is ChatGPT regulated?

Because artificial intelligence is such a new and quickly evolving field, there aren’t a lot of international regulations in place yet to control its use. U.S. President Joe Biden issued an executive order in October 2023 to help ensure the safe use of AI. And many parts of the world have data privacy laws that could apply to the use of AI, such as the EU’s GDPR.

In December 2023, the EU passed the EU AI Act to help establish a framework to ensure the safe development and use of AI, including chatbots like ChatGPT. It’s uncertain when the law will take effect, but in the meantime, businesses and other organizations will need to set up compliance strategies to align their practices with the provisions of the Act.

ChatGPT is a trademark of OpenAI OpCo, LLC.

Editorial note: Our articles provide educational information for you. Our offerings may not cover or protect against every type of crime, fraud, or threat we write about. Our goal is to increase awareness about Cyber Safety. Please review complete Terms during enrollment or setup. Remember that no one can prevent all identity theft or cybercrime, and that LifeLock does not monitor all transactions at all businesses. The Norton and LifeLock brands are part of Gen Digital Inc.
